In my last two blogs I described the Teamcenter Translation Framework and its configuration. In this blog I will cover customization and how to create a new translator service. As discussed in the Translator Framework blog, the three main components are the Dispatcher Client, the Module and the Scheduler, and any customization touches one or more of them. In almost all cases the Dispatcher Client and the Module need to be customized: in the Dispatcher Client you write code for extracting, loading, or both, and in the Module you define the exe or bat file that actually performs the translation. You also need to configure translator.xml, as discussed in my earlier configuration blog.

Customization Steps:

Let's take as an example a translation use case where we need to translate the content of a dataset from one language to another and upload the translated file to the same Item Revision the source is attached to, with a specific relation and dataset type. The relation type and the dataset type must also be configurable. The steps to perform are as follows.

1) Extract the file to be translated from Teamcenter.

2) Translate the file to the required language, using either a custom language translator or a third-party translator such as Google Translate.

3) Upload the file back to Teamcenter by creating a dataset and uploading the file into it, then attach the dataset to the Item Revision with the given relation.

Steps 1 and 3 are part of the Dispatcher Client, whereas step 2 is part of the Module implementation.

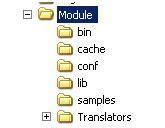

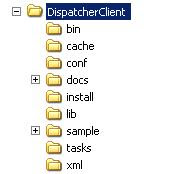

Dispatcher Customization:

The Dispatcher provides a Java-based out-of-the-box (OOTB) extractor, built on TaskPrep, for extracting specific data from Teamcenter. It also provides an OOTB Data Loader that loads the output files automatically. This automatic behavior can be controlled without writing any code by configuring Translator.xml for the specific translation service. The complete class documentation for the Dispatcher Client can be found in the docs folder inside the Dispatcher Client root directory, and sample implementations can be found in the sample folder under the same directory.

Implementation:

The Dispatcher Framework provides two main abstract classes for customizing a translator.

1) TaskPrep, for extracting the file from Teamcenter, i.e. preparing the task that is submitted to the translation service.

2) DatabaseOperation, for loading the translated files into Teamcenter.

Usually both classes need to be implemented for a new translation service. TaskPrep is called first: when a translation request is created, the Dispatcher finds the TaskPrep implementation registered for the requested translation service. Once the Module has done the translation, the Dispatcher invokes the DatabaseOperation implementation for the same service to upload the data to Teamcenter. In our example, to convert a text document from one language to another, the TaskPrep first extracts the document from the target dataset and puts the file in the staging location. Once the Module completes the translation, the DatabaseOperation class is invoked, and the translated file is uploaded to Teamcenter with the specified dataset type and attached to the target object with the specified relation.
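To make the call order concrete, here is a minimal, self-contained Java model of the sequence described above. The interfaces PrepareStage, TranslateStage and LoadStage and the class TranslationFlowSketch are hypothetical stand-ins for the TaskPrep, Module and DatabaseOperation roles; they are not the com.teamcenter.ets API, and in a real deployment the stages run in separate components (Dispatcher Client, Scheduler, Module) rather than in one method call.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-ins for the three roles; these are NOT the com.teamcenter.ets classes.
interface PrepareStage { String prepare(String datasetFile); }    // TaskPrep role: extract to staging
interface TranslateStage { String translate(String stagedFile); } // Module role: run the translator
interface LoadStage { void load(String resultFile); }             // DatabaseOperation role: upload result

public class TranslationFlowSketch {
    // Records the order in which the stages ran.
    static final List<String> trace = new ArrayList<>();

    // Runs one request through extract -> translate -> load, in that order.
    static void run(String datasetFile, PrepareStage prep, TranslateStage module, LoadStage loader) {
        String staged = prep.prepare(datasetFile);
        String result = module.translate(staged);
        loader.load(result);
    }

    public static void main(String[] args) {
        run("notes_en.txt",
            f -> { trace.add("prepare " + f); return "staging/" + f; },
            f -> { trace.add("translate " + f); return f.replace("_en", "_de"); },
            f -> trace.add("load " + f));
        // Prints: prepare notes_en.txt / translate staging/notes_en.txt / load staging/notes_de.txt
        trace.forEach(System.out::println);
    }
}
```

The point of the model is only the ordering: the loader never sees the original dataset file, only what the Module produced from the staged copy.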

Extraction Implementation:

TaskPrep is implemented by extending com.teamcenter.ets.extract.TaskPrep, an abstract class for extraction. It has member variables that encapsulate all details of the translation request as well as the staging directory location. Some of the key members are:

request: The request object for the current extract session

stagingLoc: The staging location in which all the files are placed.

The function called during execution is prepareTask(). It is declared abstract in the TaskPrep class, so every extending class must implement it.

Pseudo implementation: In an implementation we usually access the target object, called the primary object, and its associated object, called the secondary object, through the current request object. The pseudo code is as follows:

```java
// Objects selected for translation (e.g. the text dataset)
m_PrimaryObjs = request.getProperty("primaryObjects").getModelObjectArrayValue();
// Objects related to the primary objects (e.g. the Item Revision they are attached to)
m_SecondaryObjs = request.getProperty("secondaryObjects").getModelObjectArrayValue();
```

ModelObject is the SOA base wrapper class for all Teamcenter objects. Primary objects are the Teamcenter objects selected for translation, and secondary objects are the objects associated with the target through a relation. In the language translation example, we decide that the text file is the target (primary) object, so the Item Revision it is attached to is the secondary object.

Once we have the primary object, the named reference file needs to be extracted from the dataset and put in the staging location. This is done through an SOA call to Teamcenter. Sample code snippet:

```java
Dataset dataset = (Dataset) m_PrimaryObjs[i];
// Named references attached to the dataset
ModelObject contexts[] = dataset.get_ref_list();
ImanFile zIFile = null;
for (int j = 0; j < contexts.length; j++)
{
    // Skip named references that are not files
    if (!(contexts[j] instanceof ImanFile))
    {
        continue;
    }
    zIFile = (ImanFile) contexts[j];
    // Copy the file into the staging directory for this task
    m_InputFile = TranslationRequest.getFileToStaging(zIFile, stagingLoc);
}
```

We also need to create the translation request detail, which the Module refers to during translation. For example, in the language translator use case the user needs an option to specify the language to translate from and the language to translate to. This is done through translator arguments supplied when the user creates the translation request; they can be retrieved and further processed in TaskPrep. The snippet for accessing the arguments is:

```java
Map translationArgsMap = TranslationRequest.getTranslationArgs(request);
```

This map holds each argument name as the key and its value as the value. TaskPrep can also add its own arguments, based on its processing, for the Module or the database loader to use later:

```java
translationArgsMap.put(argumentKey, argumentValue);
```

For example, in the language translator we may need to set the character set to a specific value based on the target language selected by the user.
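As an illustration of such a TaskPrep-derived argument, the sketch below adds a character-set argument computed from the target language. The argument keys to_lang and charset, the helper names, and the language-to-charset rule are all assumptions made for this example; use whatever keys your Module and loader actually expect. It uses a typed Map<String, String> for clarity, whereas the original snippet uses a raw Map.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

public class CharsetArgSketch {
    // Hypothetical argument keys; match them to your own translator configuration.
    static final String TO_LANG = "to_lang";
    static final String CHARSET = "charset";

    // Hypothetical rule: Western European targets use ISO-8859-1, everything else UTF-8.
    static Charset charsetFor(String lang) {
        switch (lang) {
            case "de":
            case "fr":
            case "es":
                return StandardCharsets.ISO_8859_1;
            default:
                return StandardCharsets.UTF_8;
        }
    }

    // Adds the derived argument next to the user-supplied ones.
    static void addCharsetArg(Map<String, String> args) {
        String to = args.getOrDefault(TO_LANG, "");
        args.put(CHARSET, charsetFor(to).name());
    }

    public static void main(String[] a) {
        Map<String, String> args = new HashMap<>();
        args.put("from_lang", "en");
        args.put(TO_LANG, "de");
        addCharsetArg(args);
        System.out.println(args.get(CHARSET)); // ISO-8859-1
    }
}
```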

The translation request detail is created as an XML file that resides in the staging directory under the unique task ID. The sample will look like this:

This XML basically contains the user arguments required for translation; in the example above, these are the from and to languages, which the Module uses for translating. It also holds details of the corresponding dataset, item and item revision. These can be populated but are not always required. In the example above we also populate the UIDs of the primary and secondary objects, which can be used when loading the translated file to attach it to an object with a specific relation.
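The exact XML schema of the request detail is not reproduced here, so the following is only a sketch of the idea: a per-task folder under the staging location holding the extracted input file plus a file with the request arguments. It uses java.util.Properties.storeToXML as a stand-in format; the folder layout, the file name request_detail.xml and the random task ID are hypothetical and do not reproduce the Dispatcher's own format.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.HashMap;
import java.util.Map;
import java.util.Properties;
import java.util.UUID;

public class StagingSketch {
    // Creates <stagingRoot>/<taskId>/, copies the input file into it and writes the
    // request arguments next to it. Layout and file name are hypothetical.
    static Path stage(Path stagingRoot, Path inputFile, Map<String, String> args) throws IOException {
        String taskId = UUID.randomUUID().toString(); // stand-in for the Dispatcher task ID
        Path taskDir = Files.createDirectories(stagingRoot.resolve(taskId));
        Files.copy(inputFile, taskDir.resolve(inputFile.getFileName()), StandardCopyOption.REPLACE_EXISTING);

        Properties request = new Properties();
        request.putAll(args); // e.g. from_lang, to_lang, primary/secondary UIDs
        try (OutputStream out = Files.newOutputStream(taskDir.resolve("request_detail.xml"))) {
            request.storeToXML(out, "translation request detail (illustrative format)");
        }
        return taskDir;
    }

    // Reads the arguments back, as the Module or loader side would need to.
    static Properties readRequest(Path taskDir) throws IOException {
        Properties p = new Properties();
        try (InputStream in = Files.newInputStream(taskDir.resolve("request_detail.xml"))) {
            p.loadFromXML(in);
        }
        return p;
    }

    public static void main(String[] a) throws IOException {
        Path root = Files.createTempDirectory("staging");
        Path input = Files.createTempFile("doc", ".txt");
        Files.write(input, "Hello".getBytes(StandardCharsets.UTF_8));
        Map<String, String> args = new HashMap<>();
        args.put("from_lang", "en");
        args.put("to_lang", "de");
        Path taskDir = stage(root, input, args);
        System.out.println(readRequest(taskDir).getProperty("to_lang")); // de
    }
}
```

Keeping each task in its own folder is what lets several requests be staged at once without their input files or arguments colliding.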

Loader Implementation:

The database loader is implemented by extending the com.teamcenter.ets.load.DatabaseOperation abstract class, and its load() function must be implemented for the translation service. The DatabaseOperation class has a Translationdata attribute, transData, which encapsulates all data of the translation request. From the translation data we can get all the information we populated during extraction (TaskPrep). For example, transData gives us the list of result files from the translation, which the loader needs in order to load every file into Teamcenter. The pseudo code for this is:

```java
// Mapping information recorded for this translation task
TranslationDBMapInfo zDbMapInfo = transData.getTranslationDBMapInfo();
// Result files of the expected type produced by the Module
List zResultFileList = TranslationTaskUtil.getMapperResults(zDbMapInfo, scResultFileType);
```

Here TranslationTaskUtil is a utility class that provides various generic facilities, and scResultFileType is the file type expected from the translation. User and TaskPrep options can be accessed through transTask, the TranslationTask member of DatabaseOperation. The pseudo code for this is:

```java
TranslatorOptions transOpts = transTask.getTranslatorOptions();
```

TranslatorOptions encapsulates all options as key/value pairs. An individual option can be read as follows:

```java
// i is the index of the option being inspected (the surrounding loop is not shown)
Option transOption = transOpts.getOption(i);
if (transOption.getName().equals("SomeoptionName"))
{
    strOutputType = transOption.getValue();
}
```

All result files are uploaded through SOA calls to Teamcenter. The Dispatcher Framework provides various helper classes that encapsulate these SOA calls; if a requirement can't be fulfilled through a helper class, the SOA services can be called directly. One of the helper classes is DatasetHelper, which provides the dataset-related functions. One of its functions creates a new dataset for the result file list and attaches it to the primary or secondary target with a given relation. The pseudo code is:

```java
// Pseudo code: the quoted arguments are placeholders for your own values
dtsethelper.createInsertDataset((ItemRevision) secondaryObj, (Dataset) primaryObj,
        "datasettype", "relationtype", "namereferencetype", "resultdir", "filelist",
        insertFlag /* whether to insert into the source dataset or the item revision */);
```

Jar File Packaging and Dispatcher configuration:

We need to create a JAR file for the custom Dispatcher code. The JAR file must also contain a property file that names the implemented TaskPrep and DatabaseOperation classes, which the Dispatcher framework instantiates through reflection. This is a sample property file:

```properties
Translator.<serviceprovider>.<translationservicename>.Prepare=<packagename>.<TaskPrep class name>
Translator.<serviceprovider>.<translationservicename>.Load=<packagename>.<DatabaseOperation class name>
```

The service provider is the name of the provider of the service; for the OOTB translation services it is Siemens. It is configured in the preference ETS.PROVIDERS. The translation service name is the name of the translation service, which is configured in the Module config and in the Teamcenter preference ETS.TRANSLATORS.

In the language translation use case, the service provider name can be ExampleTranslation and the service name can be examplelanguagetranslation. The property file content for this will be:

```properties
Translator.ExampleTranslation.examplelanguagetranslation.Prepare=packagename.LanguageTransTaskPrep
Translator.ExampleTranslation.examplelanguagetranslation.Load=packagename.LanguageTransDatabaseOperation
```

where packagename is the Java package containing the two classes.
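The property keys follow the pattern Translator.<provider>.<service>.<Prepare|Load>. The sketch below shows, in plain Java, how such an entry can be resolved to a class name and instantiated by reflection. It illustrates the mechanism under that assumption and is not the Dispatcher's actual loader; the class ServiceLookupSketch is hypothetical, and java.util.ArrayList stands in for a real TaskPrep class so the example runs without Teamcenter libraries.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

public class ServiceLookupSketch {
    // Builds a key of the form Translator.<provider>.<service>.<phase>
    static String key(String provider, String service, String phase) {
        return "Translator." + provider + "." + service + "." + phase;
    }

    // Looks up the class registered for a phase and creates it via its no-argument constructor.
    static Object instantiate(Properties props, String provider, String service, String phase)
            throws ReflectiveOperationException {
        String className = props.getProperty(key(provider, service, phase));
        if (className == null) {
            throw new IllegalArgumentException("No entry for " + key(provider, service, phase));
        }
        return Class.forName(className.trim()).getDeclaredConstructor().newInstance();
    }

    public static void main(String[] args) throws IOException, ReflectiveOperationException {
        // java.util.ArrayList stands in for packagename.LanguageTransTaskPrep.
        String content = "Translator.ExampleTranslation.examplelanguagetranslation.Prepare=java.util.ArrayList\n";
        Properties props = new Properties();
        props.load(new StringReader(content));
        Object prep = instantiate(props, "ExampleTranslation", "examplelanguagetranslation", "Prepare");
        System.out.println(prep.getClass().getName()); // java.util.ArrayList
    }
}
```

Because the lookup is purely string-based, a typo in the provider or service name only shows up at run time as a missing entry, so it is worth keeping these names identical to the ETS.PROVIDERS and ETS.TRANSLATORS values.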

Once the JAR package is created, put it in the DispatcherClient\lib folder. Also update service.property under the DispatcherClient\config folder with the property file name:

```
import TSBasicService,TSCatiaService,TSProEService,TSUGNXService,TSProjectTransService,ourpropertyfilename
```

This loads all the classes when the DispatcherClient starts.

Module Customization:

Module customization usually involves the following steps.

1) Create the translation program, or identify the third-party application used for translation.

2) Usually we create a wrapper bat file to run the program. This is not strictly required, but most of the time we need to set up some environment variables, such as the Teamcenter root or TC_DATA.

3) Add the service details to Translator.xml under Module\conf.

Let's take our example use case of the language translator. We are going to use a third-party translator for it, for example Google Translate. There are Java APIs that invoke the remote Google translation service, and we need to create a Java wrapper on top of such an API to build our Teamcenter translator. Our program takes the input file, the output location, the language to translate from, and the language to translate to.
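A minimal sketch of such a wrapper is shown below. It parses the four parameters in the -input=/-outputdir= form used in the Module call shown later in this post, reads the input file, and writes the translated text into the output directory. The TextTranslator interface is a hypothetical seam where a real Google-based client would be plugged in; the placeholder implementation only upper-cases the text, and the output file naming <input name>.<to_lang> is an assumption.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

public class LanguageTranslatorWrapper {

    // The four parameters passed through by the bat file.
    static final class Params {
        final String input, outputDir, fromLang, toLang;

        Params(String input, String outputDir, String fromLang, String toLang) {
            this.input = input;
            this.outputDir = outputDir;
            this.fromLang = fromLang;
            this.toLang = toLang;
        }
    }

    // Hypothetical seam for the third-party service; a real client would implement this.
    interface TextTranslator {
        String translate(String text, String fromLang, String toLang);
    }

    // Accepts: -input=<file> -outputdir=<dir> <from_lang> <to_lang>
    static Params parse(String[] args) {
        String input = null;
        String outputDir = null;
        List<String> positional = new ArrayList<>();
        for (String arg : args) {
            if (arg.startsWith("-input=")) {
                input = arg.substring("-input=".length());
            } else if (arg.startsWith("-outputdir=")) {
                outputDir = arg.substring("-outputdir=".length());
            } else {
                positional.add(arg);
            }
        }
        if (input == null || outputDir == null || positional.size() != 2) {
            throw new IllegalArgumentException(
                "usage: -input=<file> -outputdir=<dir> <from_lang> <to_lang>");
        }
        return new Params(input, outputDir, positional.get(0), positional.get(1));
    }

    // Reads the input, translates it and writes <name>.<to_lang> into the output directory.
    static Path run(Params p, TextTranslator translator) throws IOException {
        Path in = Paths.get(p.input);
        String text = new String(Files.readAllBytes(in), StandardCharsets.UTF_8);
        String translated = translator.translate(text, p.fromLang, p.toLang);
        Path out = Paths.get(p.outputDir).resolve(in.getFileName() + "." + p.toLang);
        Files.write(out, translated.getBytes(StandardCharsets.UTF_8));
        return out;
    }

    public static void main(String[] args) throws IOException {
        Params p = parse(args);
        // Placeholder translator (upper-cases the text) so the wrapper runs without network access.
        Path out = run(p, (text, from, to) -> text.toUpperCase(Locale.ROOT));
        System.out.println("Wrote " + out);
    }
}
```

Keeping the remote call behind an interface lets the wrapper's argument handling and file I/O be tested locally before the real translation client is wired in.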

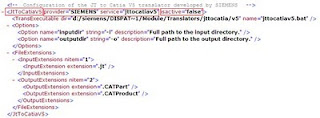

We will probably also need a bat file to set JAVA_HOME and other environment variables; it also takes the four parameters required by our program. Our sample config to be added to Translator.xml is as follows:

where ModuleRoot is a keyword that refers to the Module base directory.

When the Module framework reads the above config, it converts it into the call:

```
$ModuleRoot/Translators/examplelanguagetranslation/examplelanguagetranslation.bat -input="absolute file location" -outputdir="outputdir" "from_lang value" "to_lang value"
```

A workflow action handler can also be created to integrate language translation with Change Management and other business processes. The ITK API to use is DISPATCHER_create_request. The handler can be implemented so that it takes the translation provider, the service name and the various options supported by the translation service as arguments, which makes it reusable for other translation services.

That's all from my side on the Teamcenter Translation Framework. I hope it is helpful for people who want a quick start on translation services. Any comments are welcome.

See Also:

Dispatcher Framework

Configuring Translator